Job Groups#

Warning

This is an experimental feature. The interface may change in future versions.

Tip

Job Groups are ideal for heterogeneous parallel workloads where multiple tasks with different resource requirements need to run together and communicate with each other.

Job Groups let you run multiple related tasks in parallel as a single managed unit. Unlike managed job pipelines, which run tasks sequentially, a Job Group launches all of its tasks simultaneously, which makes it possible to build distributed architectures out of cooperating tasks.

Common use cases include:

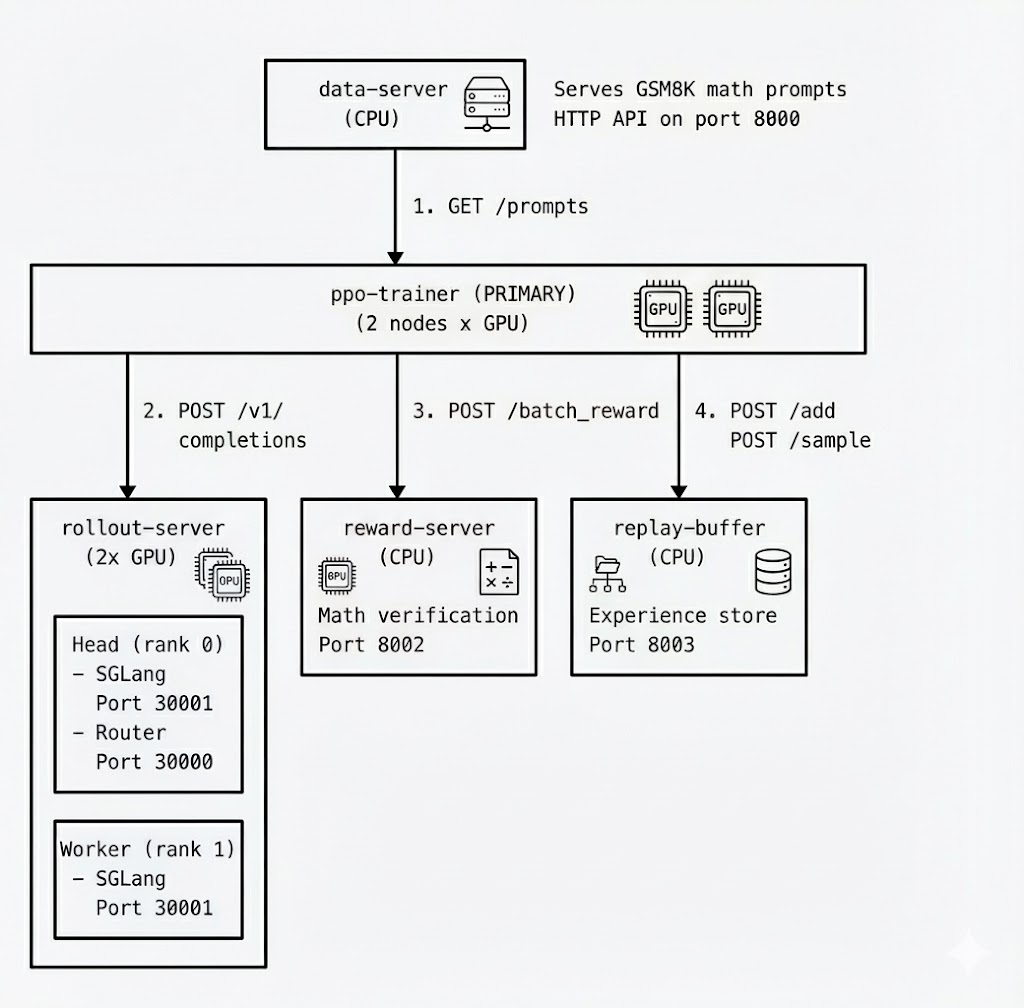

RL post-training: Separate tasks for trainer, reward modeling, rollout server, and data serving

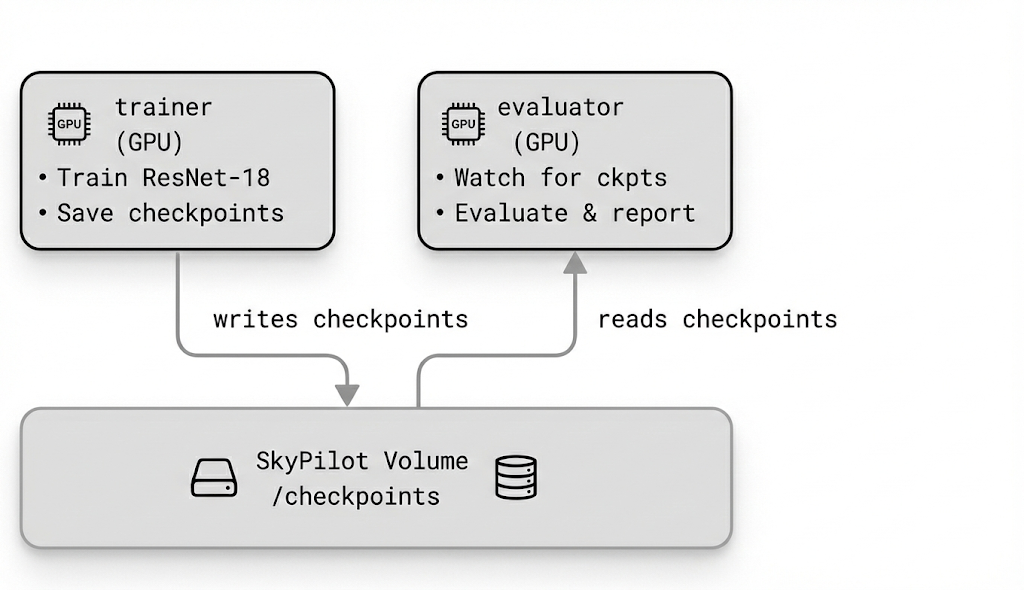

Parallel train-eval: Training and evaluation running in parallel with shared storage

Example: RL post-training architecture where each component (ppo-trainer, rollout-server, reward-server, replay-buffer, data-server) runs as a separate task within a single Job Group. Tasks can have different resource requirements and communicate via service discovery.


Creating a job group#

A Job Group is defined using a multi-document YAML file. The first document is the header that defines the group’s properties, followed by individual task definitions:

```yaml
# job-group.yaml
---
# Header: Job Group configuration
name: my-job-group
execution: parallel  # Required: indicates this is a Job Group
---
# Task 1: Trainer
name: trainer
resources:
  accelerators: A100:1
run: |
  python train.py
---
# Task 2: Evaluator
name: evaluator
resources:
  accelerators: A100:1
run: |
  python evaluate.py
```

Launch the Job Group with:

```console
$ sky jobs launch job-group.yaml
```

Header fields#

The header document supports the following fields:

| Field | Default | Description |
|---|---|---|
| name | Required | Name of the Job Group |
| execution | Required | Must be parallel |
| primary_tasks | None | List of task names that are “primary”. Tasks not in this list are “auxiliary”: long-running services (e.g., data servers, replay buffers) that wait for a signal to terminate. When all primary tasks complete, auxiliary tasks are terminated. If not set, all tasks are primary. |
| termination_delay | None | Delay before terminating auxiliary tasks when primary tasks complete, allowing them to finish pending work (e.g., flushing data). Can be a string (e.g., 30s, as in the example under Primary and auxiliary tasks). |

Each task document after the header follows the standard SkyPilot task YAML format.

Note

Every task in a Job Group must have a unique name. The name is used for service discovery and log viewing.
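The header rules and the unique-name requirement can be checked before launching. The sketch below is a hypothetical pre-flight helper, not part of SkyPilot: `validate_job_group` is an invented name, it takes the YAML documents already parsed into dictionaries, and the check that `primary_tasks` only names existing tasks is an assumption rather than documented behavior.

```python
from typing import Any, Dict, List


def validate_job_group(header: Dict[str, Any],
                       tasks: List[Dict[str, Any]]) -> List[str]:
    """Return a list of problems; an empty list means all checks passed.

    Hypothetical helper: it mirrors the rules described in this section
    and is not SkyPilot's own validation.
    """
    problems = []

    # Header: both 'name' and 'execution: parallel' are required.
    if not header.get("name"):
        problems.append("header: 'name' is required")
    if header.get("execution") != "parallel":
        problems.append("header: 'execution' must be 'parallel'")

    # Tasks: every task needs a name, and names must be unique.
    names = [task.get("name") for task in tasks]
    if any(not name for name in names):
        problems.append("tasks: every task needs a name")
    present = [name for name in names if name]
    duplicates = sorted({n for n in present if present.count(n) > 1})
    if duplicates:
        problems.append(f"tasks: duplicate names {duplicates}")

    # Assumption: primary_tasks should only reference defined tasks.
    primary = header.get("primary_tasks")
    if primary is not None:
        unknown = sorted(set(primary) - set(present))
        if unknown:
            problems.append(
                f"header: primary_tasks names unknown tasks {unknown}")

    return problems
```

Returning a list of messages rather than raising on the first failure lets a caller report every problem in one pass.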

Service discovery#

Tasks in a Job Group can discover each other using hostnames. SkyPilot automatically configures networking so that tasks can communicate.

Hostname format#

Each task’s head node is accessible via the hostname:

{task_name}-0.{job_group_name}

For multi-node tasks, worker nodes use:

{task_name}-{node_index}.{job_group_name}

For example, in a Job Group named rlhf-experiment with a 2-node trainer task:

trainer-0.rlhf-experiment - Head node (rank 0)

trainer-1.rlhf-experiment - Worker node (rank 1)
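As an illustration of the hostname format, the following sketch builds the hostnames for every node of a task. The function name `task_hostnames` is hypothetical; only the `{task_name}-{node_index}.{job_group_name}` pattern comes from this page.

```python
from typing import List


def task_hostnames(task_name: str, job_group_name: str,
                   num_nodes: int = 1) -> List[str]:
    """Build hostnames for all nodes of a task; index 0 is the head node."""
    if num_nodes < 1:
        raise ValueError("num_nodes must be at least 1")
    return [f"{task_name}-{i}.{job_group_name}" for i in range(num_nodes)]
```

For the 2-node trainer above, `task_hostnames("trainer", "rlhf-experiment", 2)` yields the head-node and worker-node hostnames in rank order.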

Environment variables#

SkyPilot injects the following environment variables into all tasks:

| Variable | Description |
|---|---|
| SKYPILOT_JOBGROUP_NAME | Name of the Job Group |

Example usage in a task:

```bash
# Access the trainer task from the evaluator using the hostname
curl http://trainer-0.${SKYPILOT_JOBGROUP_NAME}:8000/status
```
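The same lookup can be done from Python inside a task. Because all tasks in a Job Group launch simultaneously, a peer may not be listening yet when another task starts, so this sketch also polls until the port accepts connections. Both helper names (`head_node_host`, `wait_for_port`) and the timeout values are assumptions made for illustration; only `SKYPILOT_JOBGROUP_NAME` and the hostname format come from this page.

```python
import os
import socket
import time
from typing import Mapping, Optional


def head_node_host(task_name: str,
                   env: Optional[Mapping[str, str]] = None) -> str:
    """Return the head-node hostname of a peer task in this Job Group."""
    env = os.environ if env is None else env
    group = env["SKYPILOT_JOBGROUP_NAME"]  # injected by SkyPilot
    return f"{task_name}-0.{group}"


def wait_for_port(host: str, port: int, timeout: float = 300.0,
                  interval: float = 2.0) -> bool:
    """Poll until host:port accepts a TCP connection or timeout elapses."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # DNS failures and refused connections both raise OSError.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

An evaluator could call `wait_for_port(head_node_host("trainer"), 8000)` before issuing its first request, and exit with an error if the call returns `False`.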

Viewing logs#

View logs for a specific task within a Job Group:

```console
# View logs for a specific task by name
$ sky jobs logs <job_id> trainer

# View logs for a specific task by task ID
$ sky jobs logs <job_id> 0

# View all task logs (default)
$ sky jobs logs <job_id>
```

When viewing logs for a multi-task job, SkyPilot displays a hint:

```text
Hint: This job has 3 tasks. Use 'sky jobs logs 42 TASK' to view logs
for a specific task (TASK can be task ID or name).
```

Examples#

RL post-training architecture#

This example demonstrates a distributed RL post-training architecture with 5 tasks:

```yaml
---
name: rlhf-training
execution: parallel
---
name: data-server
resources:
  cpus: 4+
run: |
  python data_server.py
---
name: rollout-server
num_nodes: 2
resources:
  accelerators: A100:1
run: |
  python rollout_server.py
---
name: reward-server
resources:
  cpus: 8+
run: |
  python reward_server.py
---
name: replay-buffer
resources:
  cpus: 4+
  memory: 32+
run: |
  python replay_buffer.py
---
name: ppo-trainer
num_nodes: 2
resources:
  accelerators: A100:1
run: |
  python ppo_trainer.py \
    --data-server data-server-0.${SKYPILOT_JOBGROUP_NAME}:8000 \
    --rollout-server rollout-server-0.${SKYPILOT_JOBGROUP_NAME}:8001 \
    --reward-server reward-server-0.${SKYPILOT_JOBGROUP_NAME}:8002
```

See the full RL post-training example at llm/rl-post-training-jobgroup/ in the SkyPilot repository.
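The contents of `ppo_trainer.py` are not shown on this page. As a rough sketch of how such a script might consume the three `HOST:PORT` flags passed in the YAML above, the following uses `argparse`. The `endpoint` and `parse_args` helpers are hypothetical and are not taken from the repository example.

```python
import argparse
from typing import List, Optional, Tuple


def endpoint(value: str) -> Tuple[str, int]:
    """Parse 'HOST:PORT' into a (host, port) tuple."""
    host, sep, port = value.rpartition(":")
    if not sep or not host or not port.isdigit():
        raise argparse.ArgumentTypeError(
            f"expected HOST:PORT, got {value!r}")
    return host, int(port)


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the service endpoints passed on the command line."""
    parser = argparse.ArgumentParser(description="PPO trainer (sketch)")
    # Flag names match the run command in the ppo-trainer task.
    for flag in ("--data-server", "--rollout-server", "--reward-server"):
        parser.add_argument(flag, type=endpoint, required=True,
                            metavar="HOST:PORT")
    return parser.parse_args(argv)
```

Validating the endpoints at startup makes a misconfigured hostname fail immediately instead of partway through training.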

Primary and auxiliary tasks#

In many distributed workloads, you have a main task (e.g., trainer) and supporting services (e.g., data servers, replay buffers) that run indefinitely until the main task signals completion. These supporting services are “auxiliary tasks” - they don’t have a natural termination point and need to be told when to shut down.

Use primary_tasks to designate which tasks drive the job’s lifecycle. Auxiliary tasks (those not listed) will be automatically terminated when all primary tasks complete:

```yaml
---
name: train-with-services
execution: parallel
primary_tasks: [trainer]  # Only trainer is primary
termination_delay: 30s    # Give services 30s to finish after trainer completes
---
name: trainer
resources:
  accelerators: A100:1
run: |
  python train.py  # Primary task: job completes when this finishes
---
name: data-server
resources:
  cpus: 4+
run: |
  python data_server.py  # Auxiliary: terminated 30s after trainer completes
```

When the trainer task finishes, the data-server (auxiliary) task will receive a termination signal after the 30-second delay, allowing it to flush pending data or perform cleanup.
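The lifecycle rule can be summarized in a few lines of Python. This is only a model of the behavior described above, with hypothetical function names; it is not how SkyPilot implements the feature.

```python
from typing import Iterable, List, Optional, Set, Tuple


def split_tasks(
    task_names: Iterable[str],
    primary_tasks: Optional[Iterable[str]] = None,
) -> Tuple[List[str], List[str]]:
    """Split task names into (primary, auxiliary), preserving order.

    If primary_tasks is not set, every task is primary.
    """
    names = list(task_names)
    if primary_tasks is None:
        return names, []
    primary_set = set(primary_tasks)
    primary = [n for n in names if n in primary_set]
    auxiliary = [n for n in names if n not in primary_set]
    return primary, auxiliary


def should_terminate_auxiliary(finished: Set[str],
                               primary: List[str]) -> bool:
    """Auxiliary tasks are terminated once all primary tasks are done."""
    return all(name in finished for name in primary)
```

For the example above, `split_tasks(["trainer", "data-server"], ["trainer"])` classifies `data-server` as auxiliary, and termination is due as soon as `trainer` is in the finished set (after which the configured delay applies).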

Current limitations#

Co-location: All tasks in a Job Group run on the same infrastructure (same Kubernetes cluster or cloud zone).

Networking: Service discovery (hostname-based communication between tasks) currently only works on Kubernetes. On other clouds, tasks can run in parallel but cannot communicate with each other using the hostname format.
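Given the networking limitation, a task that may run outside Kubernetes might want to check whether a peer hostname resolves before relying on it. The sketch below is one possible guard; `hostname_resolves` is a hypothetical helper, and the `resolver` parameter exists only so the lookup can be replaced in tests.

```python
import socket
from typing import Callable


def hostname_resolves(
    hostname: str,
    resolver: Callable[[str], str] = socket.gethostbyname,
) -> bool:
    """Return True if the hostname resolves to an address."""
    try:
        resolver(hostname)
        return True
    except OSError:  # socket.gaierror is a subclass of OSError
        return False
```

A task could call this on a peer hostname at startup and fail with a clear message when service discovery is unavailable.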

Note

Job Groups require execution: parallel in the header. For sequential task execution, use managed job pipelines instead (omit the execution field or set it to serial).

See also

Managed Jobs for single tasks or sequential pipelines.

Distributed Multi-Node Jobs for multi-node distributed training within a single task.