Managed Jobs#

Tip

This feature is great for scaling out: running a single job for long durations, or running many jobs in parallel.

See also

Using a Pool of Workers for running batch inference workloads across multiple infrastructure.

Job Groups for running multiple heterogeneous tasks in parallel that can communicate with each other.

SkyPilot supports managed jobs (sky jobs), which can automatically retry failures, recover from spot instance preemptions, and clean up when done.

To start a managed job, use sky jobs launch:

$ sky jobs launch -n myjob hello_sky.yaml

Task from YAML spec: hello_sky.yaml

Managed job 'myjob' will be launched on (estimated):

Considered resources (1 node):

------------------------------------------------------------------------------------------

INFRA INSTANCE vCPUs Mem(GB) GPUS COST ($) CHOSEN

------------------------------------------------------------------------------------------

AWS (us-east-1) m6i.2xlarge 8 32 - 0.38 ✔

------------------------------------------------------------------------------------------

Launching a managed job 'myjob'. Proceed? [Y/n]: Y

... <job is submitted and launched>

(setup pid=2383) Running setup.

(myjob, pid=2383) Hello, SkyPilot!

✓ Managed job finished: 1 (status: SUCCEEDED).

Managed Job ID: 1

📋 Useful Commands

├── To cancel the job: sky jobs cancel 1

├── To stream job logs: sky jobs logs 1

├── To stream controller logs: sky jobs logs --controller 1

└── To view all managed jobs: sky jobs queue

The job is launched on a temporary SkyPilot cluster, managed end-to-end, and automatically cleaned up.

Managed jobs have several benefits:

Use spot instances: Jobs can run on auto-recovering spot instances. This saves significant costs (e.g., ~70% for GPU VMs) by making preemptible spot instances useful for long-running jobs.

Scale across regions and clouds: Easily run and manage thousands of jobs at once, using instances and GPUs across multiple regions/clouds.

Recover from failure: When a job fails, you can automatically retry it on a new cluster, eliminating flaky failures.

Managed pipelines: Run pipelines that contain multiple tasks. Useful for running a sequence of tasks that depend on each other, e.g., data processing, training a model, and then running inference on it.


Create a managed job#

A managed job is created from a standard SkyPilot YAML. For example:

# bert_qa.yaml
name: bert-qa

resources:
  accelerators: V100:1
  use_spot: true  # Use spot instances to save cost.

envs:
  # Fill in your wandb key: copy from https://wandb.ai/authorize
  # Alternatively, you can use `--env WANDB_API_KEY=$WANDB_API_KEY`
  # to pass the key in the command line, during `sky jobs launch`.
  WANDB_API_KEY:

# Assume your working directory is under `~/transformers`.
# To get the code for this example, run:
# git clone https://github.com/huggingface/transformers.git ~/transformers -b v4.30.1
workdir: ~/transformers

setup: |
  pip install -e .
  cd examples/pytorch/question-answering/
  pip install -r requirements.txt torch==1.12.1+cu113 --extra-index-url https://download.pytorch.org/whl/cu113
  pip install wandb

run: |
  cd examples/pytorch/question-answering/
  python run_qa.py \
    --model_name_or_path bert-base-uncased \
    --dataset_name squad \
    --do_train \
    --do_eval \
    --per_device_train_batch_size 12 \
    --learning_rate 3e-5 \
    --num_train_epochs 50 \
    --max_seq_length 384 \
    --doc_stride 128 \
    --report_to wandb \
    --output_dir /tmp/bert_qa/

Note

Workdir and file mounts with local files will be automatically uploaded to a cloud bucket. The bucket will be cleaned up after the job finishes.

To launch this YAML as a managed job, use sky jobs launch:

$ sky jobs launch -n bert-qa-job bert_qa.yaml

To see all flags, you can run sky jobs launch --help or see the CLI reference for more information.

SkyPilot will launch and start monitoring the job.

Under the hood, SkyPilot spins up a temporary cluster for the job.

If a spot preemption or any machine failure happens, SkyPilot will automatically search for resources across regions and clouds to re-launch the job.

Resources are cleaned up as soon as the job is finished.

Tip

You can test your YAML with an unmanaged sky launch, then do a production run as a managed job using sky jobs launch.

sky launch and sky jobs launch have a similar interface, but are useful in different scenarios.

| sky launch | sky jobs launch |
|---|---|
| Long-lived, manually managed cluster | Dedicated auto-managed cluster for each job |
| Spot preemptions must be manually recovered | Spot preemptions are auto-recovered |
| Number of parallel jobs limited by cluster resources | Easily manage hundreds or thousands of jobs at once |
| Good for interactive dev | Good for scaling out production jobs |

Work with managed jobs#

For a list of all commands and options, run sky jobs --help or read the CLI reference.

See a list of managed jobs:

$ sky jobs queue

Fetching managed jobs...

Managed jobs:

ID NAME RESOURCES SUBMITTED TOT. DURATION JOB DURATION #RECOVERIES STATUS

2 roberta 1x [A100:8][Spot] 2 hrs ago 2h 47m 18s 2h 36m 18s 0 RUNNING

1 bert-qa 1x [V100:1][Spot] 4 hrs ago 4h 24m 26s 4h 17m 54s 0 RUNNING

This command shows 50 managed jobs by default. Use --limit <num> to show more jobs, or --all to show all jobs.

Stream the logs of a running managed job:

$ sky jobs logs -n bert-qa # by name

$ sky jobs logs 2 # by job ID

Cancel a managed job:

$ sky jobs cancel -n bert-qa # by name

$ sky jobs cancel 2 # by job ID

Note

If a managed job fails, check sky jobs queue -a for a brief reason for the failure. For more details related to provisioning, check sky jobs logs --controller <job_id>.

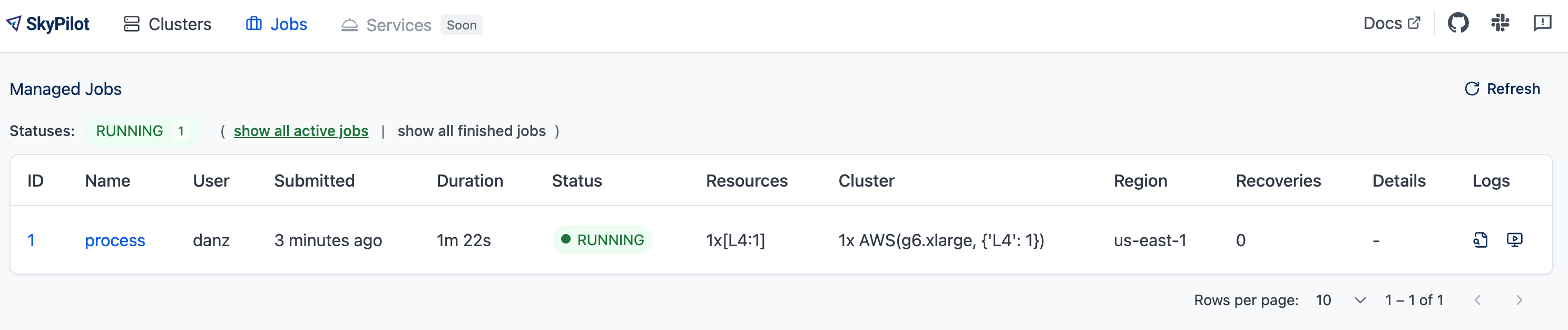

Viewing jobs in dashboard#

The SkyPilot dashboard (sky dashboard) has a Jobs page that shows all managed jobs.

The UI shows the same information as the CLI sky jobs queue -au.

Running on spot instances#

Managed jobs can run on spot instances, and preemptions are auto-recovered by SkyPilot.

To run on spot instances, use sky jobs launch --use-spot, or specify use_spot: true in your SkyPilot YAML.

name: spot-job

resources:
  accelerators: A100:8
  use_spot: true

run: ...

Tip

Spot instances are cloud VMs that may be “preempted”. The cloud provider can forcibly shut down the underlying VM and remove your access to it, interrupting the job running on that instance.

In exchange, spot instances are significantly cheaper than normal instances that are not subject to preemption (so-called “on-demand” instances). Depending on the cloud and VM type, spot instances can be 70-90% cheaper.

SkyPilot automatically finds available spot instances across regions and clouds to maximize availability. Any spot preemptions are automatically handled by SkyPilot without user intervention.

Note

By default, a job will be restarted from scratch after each preemption recovery. To avoid redoing work after recovery, implement checkpointing and recovery. Your application code can checkpoint its progress periodically to a mounted cloud bucket. The program can then reload the latest checkpoint when restarted.

Here is an example of a training job failing over different regions across AWS and GCP.

Quick comparison between managed spot jobs vs. launching unmanaged spot clusters:

| Command | Managed? | SSH-able? | Best for |
|---|---|---|---|
| sky jobs launch --use-spot | Yes, preemptions are auto-recovered | No | Scaling out long-running jobs (e.g., data processing, training, batch inference) |
| sky launch --use-spot | No, preemptions are not handled | Yes | Interactive dev on spot instances (especially for hardware with low preemption rates) |

Either spot or on-demand/reserved#

By default, on-demand instances will be used (not spot instances). To use spot instances, you must specify --use-spot on the command line or use_spot: true in your SkyPilot YAML.

However, you can also tell SkyPilot to use either spot or on-demand instances, depending on availability. In your SkyPilot YAML, use any_of to specify both spot and on-demand/reserved instances as candidate resources for a job. See documentation here for more details.

resources:
  accelerators: A100:8
  any_of:
    - use_spot: true
    - use_spot: false

In this example, SkyPilot will choose the cheapest resource to use, which almost certainly will be spot instances. If spot instances are not available, SkyPilot will fall back to launching on-demand/reserved instances.
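The fallback described above can be pictured with a small Python sketch. It is an illustrative model only, not SkyPilot's optimizer: the candidate dictionaries, the hourly_cost values, and the is_available callback are hypothetical stand-ins introduced for this example.

```python
def pick_resource(candidates, is_available):
    """Return the cheapest candidate that is currently available, or None."""
    for candidate in sorted(candidates, key=lambda c: c["hourly_cost"]):
        if is_available(candidate):
            return candidate
    return None


# Hypothetical prices, for illustration only (not real cloud pricing).
candidates = [
    {"accelerators": "A100:8", "use_spot": False, "hourly_cost": 30.0},
    {"accelerators": "A100:8", "use_spot": True, "hourly_cost": 10.0},
]

# When spot capacity exists, the cheaper spot candidate is chosen.
chosen = pick_resource(candidates, lambda c: True)

# When spot is unavailable, the sketch falls back to on-demand.
fallback = pick_resource(candidates, lambda c: not c["use_spot"])
```

Sorting by cost first and checking availability second mirrors the "cheapest resource, then fall back" order stated in the text.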

Checkpointing and recovery#

To recover quickly from spot instance preemptions, a cloud bucket is typically needed to store the job’s states (e.g., model checkpoints). Any data on disk that is not stored inside a cloud bucket will be lost during the recovery process.

Below is an example of mounting a bucket to /checkpoint:

file_mounts:
  /checkpoint:
    name: # NOTE: Fill in your bucket name
    mode: MOUNT_CACHED # or MOUNT

To learn more about the different modes, see SkyPilot bucket mounting and high-performance training.
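On the application side, checkpointing to the mounted bucket could look like the following minimal sketch. It assumes the bucket is mounted at a directory such as /checkpoint; the step_<N>.json naming scheme, the function names, and the toy training loop are hypothetical and stand in for your framework's own checkpoint API.

```python
import json
import os
import re

# Hypothetical naming scheme for checkpoint files: step_<N>.json
CKPT_PATTERN = re.compile(r"^step_(\d+)\.json$")


def latest_checkpoint(ckpt_dir):
    """Return (step, path) of the newest checkpoint, or (0, None) if none exist."""
    best_step, best_path = 0, None
    if not os.path.isdir(ckpt_dir):
        return best_step, best_path
    for name in os.listdir(ckpt_dir):
        match = CKPT_PATTERN.match(name)
        if match and int(match.group(1)) > best_step:
            best_step = int(match.group(1))
            best_path = os.path.join(ckpt_dir, name)
    return best_step, best_path


def save_checkpoint(ckpt_dir, step, state):
    """Write to a temporary file, then rename it into place.

    On a local disk this avoids exposing half-written files; how renames
    behave on a bucket mount depends on the mount mode.
    """
    os.makedirs(ckpt_dir, exist_ok=True)
    final_path = os.path.join(ckpt_dir, f"step_{step}.json")
    tmp_path = final_path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, final_path)
    return final_path


def train(ckpt_dir, total_steps, save_every):
    """Toy loop: resume from the latest checkpoint, then run to total_steps."""
    step, path = latest_checkpoint(ckpt_dir)
    state = {"loss": None}
    if path is not None:
        with open(path) as f:
            state = json.load(f)
    while step < total_steps:
        step += 1
        state["loss"] = 1.0 / step  # stand-in for real training work
        if step % save_every == 0 or step == total_steps:
            save_checkpoint(ckpt_dir, step, state)
    return step, state
```

Calling train("/checkpoint", total_steps, save_every) at the start of the run section means a job restarted after a preemption continues from the last saved step instead of step 0.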

Real-world examples#

See the Model training guide for more training examples and best practices.

Jobs restarts on user code failure#

Preemptions or hardware failures will be auto-recovered, but by default, user code failures (non-zero exit codes) are not auto-recovered.

In some cases, you may want a job to automatically restart even if it fails in application code. For instance, if a training job crashes due to an NVIDIA driver issue or NCCL timeout, it should be recovered. To specify this, set max_restarts_on_errors in resources.job_recovery in the SkyPilot YAML.

resources:
  accelerators: A100:8
  job_recovery:
    # Restart the job up to 3 times on user code errors.
    max_restarts_on_errors: 3

This will restart the job, up to 3 times (for a total of 4 attempts), if your code has any non-zero exit code. Each restart runs on a newly provisioned temporary cluster.

Recovering on specific exit codes#

You can also specify a list of exit codes that should always trigger recovery, regardless of the max_restarts_on_errors limit. This is useful when certain exit codes indicate transient errors that should always be retried (e.g., NCCL timeouts, specific GPU driver issues).

resources:
  accelerators: A100:8
  job_recovery:
    max_restarts_on_errors: 3
    # Always recover if the job exits with code 33 or 34.
    # In a multi-node job, recovery is triggered if any node exits with a code in [33, 34].
    # Can also use a single integer: recover_on_exit_codes: 33
    recover_on_exit_codes: [33, 34]

In this configuration:

If the job exits with code 33 or 34, it will be recovered. Restarts triggered by these specific exit codes do not count towards the max_restarts_on_errors limit.

For any other non-zero exit code, the job will be recovered up to 3 times (as specified by max_restarts_on_errors).

Note

For multi-node jobs, recovery is triggered if any node exits with a code in recover_on_exit_codes.

Warning

You should not use exit code 137 in recover_on_exit_codes. This code is used internally by SkyPilot and including it may interfere with proper recovery behavior.
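The interaction between the two settings can be summarized as a small decision function. This is a sketch of the policy as documented above, not SkyPilot's actual implementation; the function name, its parameters, and the returned tuple are hypothetical.

```python
def should_restart(exit_code, restarts_used,
                   max_restarts_on_errors=0, recover_on_exit_codes=()):
    """Model the documented restart policy for a user-code exit.

    Returns (restart, counts_toward_limit).
    """
    # The YAML accepts either a single integer or a list of integers.
    if isinstance(recover_on_exit_codes, int):
        recover_on_exit_codes = (recover_on_exit_codes,)

    if exit_code == 0:
        # Success: nothing to recover.
        return (False, False)
    if exit_code in recover_on_exit_codes:
        # Listed codes always trigger recovery and do not use up the budget.
        return (True, False)
    if restarts_used < max_restarts_on_errors:
        # Any other failure consumes one of the allowed restarts.
        return (True, True)
    # Budget exhausted, or max_restarts_on_errors not set (the default).
    return (False, False)
```

With max_restarts_on_errors: 3 and recover_on_exit_codes: [33, 34], an exit code of 33 is retried even after three other restarts, while an exit code of 1 is retried only while fewer than three counted restarts have been used.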

When will my job be recovered?#

Here’s how various kinds of failures will be handled by SkyPilot:

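| Failure | How SkyPilot handles it |
|---|---|
| User code fails (non-zero exit code) | If the exit code is in recover_on_exit_codes, always recover. Otherwise, restart up to max_restarts_on_errors times if it is set; by default, the job is not recovered. |
| Instances are preempted or underlying hardware fails | Tear down the old temporary cluster and provision a new one in another region, then restart the job. |
| Can’t find available resources due to cloud quota or capacity restrictions | Try other regions and other clouds indefinitely until resources are found. |
| Cloud config/auth issue or invalid job configuration | Mark the job as failed. |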

To see the logs of user code (setup or run commands), use sky jobs logs <job_id>. If there is a provisioning or recovery issue, you can see the provisioning logs by running sky jobs logs --controller <job_id>.

Tip

Under the hood, SkyPilot uses a “controller” to provision, monitor, and recover the underlying temporary clusters. See How it works: The jobs controller.

Scaling to many jobs#

You can easily manage dozens, hundreds, or thousands of managed jobs at once. This is a great fit for batch jobs such as data processing, batch inference, or hyperparameter sweeps. To see an example launching many jobs in parallel, see Many Parallel Jobs.

Tip

For workloads that can reuse the same environment across many jobs, consider using Pools. Pools provide faster cold-starts by maintaining a set of pre-provisioned workers that can be reused across job submissions.

To increase the maximum number of jobs that can run at once, see Best practices for scaling up the jobs controller.
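As a rough illustration of a hyperparameter sweep, the sketch below builds one sky jobs launch command per setting. The -n and --env options appear earlier on this page; the -y flag (skip the confirmation prompt), the LEARNING_RATE variable, and the helper name are assumptions made for this example, and the YAML would need to declare LEARNING_RATE under envs and use it in its run section.

```python
import shlex


def sweep_commands(yaml_path, name_prefix, learning_rates):
    """Build one `sky jobs launch` argument list per learning rate."""
    commands = []
    for i, lr in enumerate(learning_rates):
        commands.append([
            "sky", "jobs", "launch",
            "-n", f"{name_prefix}-{i}",        # unique job name per setting
            "--env", f"LEARNING_RATE={lr}",    # hypothetical env var read by the YAML
            "-y",                              # assumed flag: skip the confirmation prompt
            yaml_path,
        ])
    return commands


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    for cmd in sweep_commands("bert_qa.yaml", "bert-qa-lr", ["1e-5", "3e-5", "5e-5"]):
        print(shlex.join(cmd))
```

Printing the commands first makes it easy to review the sweep; to actually submit, each list could be passed to subprocess.run.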

Managed pipelines#

A pipeline is a managed job that contains a sequence of tasks running one after another.

This is useful for running a sequence of tasks that depend on each other, e.g., training a model and then running inference on it. Different tasks can have different resource requirements to use appropriate per-task resources, which saves costs, while keeping the burden of managing the tasks off the user.

See also

Job Groups for running multiple tasks in parallel instead of sequentially.

Note

In other words, a managed job is either a single task, a pipeline (sequential tasks), or a job group (parallel tasks). All managed jobs are submitted by sky jobs launch.

To run a pipeline, specify the sequence of tasks in a YAML file. Here is an example:

name: pipeline

---

name: train

resources:
  accelerators: V100:8
  any_of:
    - use_spot: true
    - use_spot: false

file_mounts:
  /checkpoint:
    name: train-eval # NOTE: Fill in your bucket name
    mode: MOUNT

setup: |
  echo setup for training

run: |
  echo run for training
  echo save checkpoints to /checkpoint

---

name: eval

resources:
  accelerators: T4:1
  use_spot: false

file_mounts:
  /checkpoint:
    name: train-eval # NOTE: Fill in your bucket name
    mode: MOUNT

setup: |
  echo setup for eval

run: |
  echo load trained model from /checkpoint
  echo eval model on test set

The YAML above defines a pipeline with two tasks. The leading name: pipeline entry names the pipeline itself. The first task has name train and the second task has name eval. The tasks are separated by a line with three dashes (---). Each task has its own resources, setup, and run sections. Tasks are executed sequentially. If a task fails, later tasks are skipped.

Tip

To explicitly indicate a pipeline (sequential execution), you can add execution: serial to the header. This is optional since pipelines are the default when execution is omitted. Use execution: parallel for job groups instead.

To pass data between the tasks, use a shared file mount. In this example, the train task writes its output to the /checkpoint file mount, which the eval task is then able to read from.

To submit the pipeline, the same command sky jobs launch is used. The pipeline will be automatically launched and monitored by SkyPilot. You can check the status of the pipeline with sky jobs queue or sky dashboard.

$ sky jobs launch -n pipeline pipeline.yaml

$ sky jobs queue

Fetching managed job statuses...

Managed jobs

In progress jobs: 1 RECOVERING

ID TASK NAME REQUESTED SUBMITTED TOT. DURATION JOB DURATION #RECOVERIES STATUS

8 pipeline - 50 mins ago 47m 45s - 1 RECOVERING

↳ 0 train 1x [V100:8][Spot|On-demand] 50 mins ago 47m 45s - 1 RECOVERING

↳ 1 eval 1x [T4:1] - - - 0 PENDING

Note

The $SKYPILOT_TASK_ID environment variable is also available in the run section of each task. It is unique for each task in the pipeline.

For example, the $SKYPILOT_TASK_ID for the eval task above is:

“sky-managed-2022-10-06-05-17-09-750781_pipeline_eval_8-1”.
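One possible use of this variable is to keep each task's outputs in its own subdirectory of a shared mount. The sketch below assumes a mount at /checkpoint as in the pipeline example; the helper name and the "local-run" fallback are hypothetical.

```python
import os
import posixpath


def task_output_dir(base_dir="/checkpoint"):
    """Return a per-task output directory under the shared mount."""
    # Fall back to a fixed name when running outside a managed job.
    task_id = os.environ.get("SKYPILOT_TASK_ID", "local-run")
    return posixpath.join(base_dir, task_id)
```

Because the ID differs per task, the train and eval tasks would write to different subdirectories when both call this helper.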

File uploads for managed jobs#

For managed jobs, SkyPilot uses an intermediate bucket to store files used in the task, such as local file_mounts and the workdir.

If you do not configure a bucket, SkyPilot will automatically create a temporary bucket named skypilot-filemounts-{username}-{run_id} for each job launch. SkyPilot automatically deletes the bucket after the job completes.

Object store access is not necessary to use managed jobs. If cloud object storage is not available (e.g., Kubernetes deployments), SkyPilot automatically falls back to a two-hop upload: files are first copied to the jobs controller, and each job then downloads them from there.

Tip

To force disable using cloud buckets even when available, set jobs.force_disable_cloud_bucket in your config:

# ~/.sky/config.yaml
jobs:
  force_disable_cloud_bucket: true

Setting the job files bucket#

If you want to use a pre-provisioned bucket for storing intermediate files, set jobs.bucket in ~/.sky/config.yaml:

# ~/.sky/config.yaml
jobs:
  bucket: s3://my-bucket # Supports s3://, gs://, https://<azure_storage_account>.blob.core.windows.net/<container>, r2://, cos://<region>/<bucket>

If you choose to specify a bucket, ensure that the bucket already exists and that you have the necessary permissions.

When using a pre-provisioned intermediate bucket with jobs.bucket, SkyPilot creates job-specific directories under the bucket root to store files. They are organized in the following structure:

# cloud bucket, s3://my-bucket/ for example
my-bucket/
├── job-15891b25/            # Job-specific directory
│   ├── local-file-mounts/   # Files from local file mounts
│   ├── tmp-files/           # Temporary files
│   └── workdir/             # Files from workdir
└── job-cae228be/            # Another job's directory
    ├── local-file-mounts/
    ├── tmp-files/
    └── workdir/

When using a custom bucket (jobs.bucket), the job-specific directories (e.g., job-15891b25/) created by SkyPilot are removed when the job completes.

Tip

Multiple users can share the same intermediate bucket. Each user’s jobs will have their own unique job-specific directories, ensuring that files are kept separate and organized.

How it works: The jobs controller#

The jobs controller is a small on-demand CPU VM or pod created by SkyPilot to manage all jobs. It is automatically launched when the first managed job is submitted, and it is autostopped after it has been idle for 10 minutes (i.e., after all managed jobs finish and no new managed job is submitted in that duration). Thus, no user action is needed to manage its lifecycle.

Note

If you are using a SkyPilot API server, you can run the controller within the same pod as your API server by enabling consolidation mode.

You can see the controller with sky status -u and refresh its status by using the -r/--refresh flag.

While the cost of the jobs controller is negligible (~$0.25/hour when running and less than $0.004/hour when stopped), you can still tear it down manually with sky down <job-controller-name>, where <job-controller-name> can be found in the output of sky status -u.

Note

Tearing down the jobs controller loses all logs and status information for the finished managed jobs. It is only allowed when there are no in-progress managed jobs to ensure no resource leakage.

To adjust the size of the jobs controller instance, see Customizing jobs controller resources.

High availability controller#

High availability mode ensures the controller for Managed Jobs remains resilient to failures by running it as a Kubernetes Deployment with automatic restarts and persistent storage. This helps maintain management capabilities even if the controller pod crashes or the node fails.

To enable high availability for Managed Jobs, simply set the high_availability flag to true under jobs.controller in your ~/.sky/config.yaml, and ensure the controller runs on Kubernetes:

jobs:
  controller:
    resources:
      cloud: kubernetes
    high_availability: true

This will deploy the controller as a Kubernetes Deployment with persistent storage, allowing automatic recovery on failures. For prerequisites, setup steps, and recovery behavior, see the detailed page: High Availability Controller.

Setup and best practices#

Using long-lived credentials#

Since the jobs controller is a long-lived instance that will manage other cloud instances, it’s best to use static credentials that do not expire. If a credential expires, it could leave the controller with no way to clean up a job, leading to expensive cloud instance leaks. For this reason, it’s preferred to set up long-lived credential access, such as a ~/.aws/credentials file on AWS, or a service account json key file on GCP.

To use long-lived static credentials for the jobs controller, just make sure the right credentials are in use by SkyPilot. They will be automatically uploaded to the jobs controller. If you’re already using local credentials that don’t expire, no action is needed.

To set up credentials:

AWS: Create a dedicated SkyPilot IAM user and use a static ~/.aws/credentials file.

GCP: Create a GCP service account with a static JSON key file.

Other clouds: Make sure you are using credentials that do not expire.

Customizing jobs controller resources#

You may want to customize the jobs controller resources for several reasons:

Increase the maximum number of jobs that can run concurrently, which is based on the controller’s memory allocation. (Default: ~600, see best practices)

Use a lower-cost controller (if you have a low number of concurrent managed jobs).

Force the jobs controller to run in a specific location. (Default: cheapest location)

Change the disk_size of the jobs controller to store more logs. (Default: 50GB)

To achieve the above, you can specify custom configs in ~/.sky/config.yaml with the following fields:

jobs:
  # NOTE: these settings only take effect for a new jobs controller, not if
  # you have an existing one.
  controller:
    resources:
      # All configs below are optional.
      # Specify the location of the jobs controller.
      infra: gcp/us-central1
      # Bump cpus to allow more managed jobs to be launched concurrently. (Default: 4+)
      cpus: 8+
      # Bump memory to allow more managed jobs to be running at once.
      # By default, it scales with CPU (4x).
      memory: 64+
      # Specify the disk_size in GB of the jobs controller.
      disk_size: 100

The resources field has the same spec as a normal SkyPilot job; see here.

Note

These settings will not take effect if you have an existing controller (either stopped or live). For them to take effect, tear down the existing controller first, which requires all in-progress jobs to finish or be canceled.

To see your current jobs controller, use sky status -u.

$ sky status -u --refresh

Clusters

NAME INFRA RESOURCES STATUS AUTOSTOP LAUNCHED

my-cluster-1 AWS (us-east-1) 1x(cpus=16, m6i.4xlarge, ...) STOPPED - 1 week ago

my-other-cluster GCP (us-central1) 1x(cpus=16, n2-standard-16, ...) STOPPED - 1 week ago

sky-jobs-controller-919df126 AWS (us-east-1) 1x(cpus=4, m6i.xlarge, disk_size=50) STOPPED 10m 1 day ago

Managed jobs

No in-progress managed jobs.

Services

No live services.

In this example, you can see the jobs controller (sky-jobs-controller-919df126) is an m6i.xlarge on AWS, which is the default size.

To tear down the current controller, so that new resource config is picked up, use sky down.

$ sky down sky-jobs-controller-919df126

WARNING: Tearing down the managed jobs controller. Please be aware of the following:

* All logs and status information of the managed jobs (output of `sky jobs queue`) will be lost.

* No in-progress managed jobs found. It should be safe to terminate (see caveats above).

To proceed, please type 'delete': delete

Terminating cluster sky-jobs-controller-919df126...done.

Terminating 1 cluster ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 100% 0:00:00

The next time you use sky jobs launch, a new controller will be created with the updated resources.

Best practices for scaling up the jobs controller#

Tip

For managed jobs, it’s highly recommended to use long-lived credentials for cloud authentication. This is so that the jobs controller credentials do not expire. This is particularly important in large production runs to avoid leaking resources.

The number of active jobs that the controller supports is based on the controller size. There are two limits that apply:

Actively launching job count: the limit is 8 * floor((memory - 2GiB) / 3.59GiB), with a maximum of 512 jobs. A job counts towards this limit when it is first starting, launching instances, or recovering. The default controller size has 16 GiB memory, meaning 24 jobs can be actively launching at once.

Running job count: the limit is 200 * floor((memory - 2GiB) / 3.59GiB), with a maximum of 2000 jobs. The default controller size supports up to 600 jobs running in parallel.

The default size is appropriate for most moderate use cases, but if you need to run hundreds or thousands of jobs at once, you should increase the controller size. Each additional ~3.6 GiB of controller memory adds capacity for 8 concurrent launches and 200 concurrently running jobs.

Increase CPU modestly as memory grows to keep controller responsiveness high, but note that the hard parallelism limits are driven by available memory. A ratio of 4 GiB memory per CPU works well in our testing.
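To size a controller, the two formulas above can be evaluated directly. The following sketch transcribes them into Python; the function name and the returned dictionary keys are hypothetical, and the constants are taken from the text.

```python
import math


def controller_job_limits(memory_gib):
    """Evaluate the documented launching/running limits for a controller."""
    # Number of ~3.59 GiB memory units left after reserving 2 GiB.
    units = max(0, math.floor((memory_gib - 2) / 3.59))
    return {
        "launching": min(8 * units, 512),    # capped at 512 jobs
        "running": min(200 * units, 2000),   # capped at 2000 jobs
    }


default_limits = controller_job_limits(16)   # default controller: 16 GiB
larger_limits = controller_job_limits(64)    # e.g. memory: 64+
```

For the default 16 GiB controller this gives 24 launching and 600 running jobs, matching the figures above. A 64 GiB controller yields 17 units, so 136 concurrent launches, with the running limit reaching the 2000 cap.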

For absolute maximum parallelism, the following per-cloud configurations are recommended:

# AWS
jobs:
  controller:
    resources:
      infra: aws
      cpus: 192
      memory: 4x
      disk_size: 500

# GCP
jobs:
  controller:
    resources:
      infra: gcp
      cpus: 128
      memory: 4x
      disk_size: 500

# Azure
jobs:
  controller:
    resources:
      infra: azure
      cpus: 96
      memory: 4x
      disk_size: 500

Note

Remember to tear down your controller to apply these changes, as described above.

With this configuration, you can launch up to 512 jobs at once. Once the jobs are launched, up to 2000 jobs can be running in parallel.

Run the controller within the API server#

If you have deployed a remote API server, you can avoid needing to launch a separate VM/pod for the controller. We call this deployment mode “consolidation mode”, as the API server and jobs controller are consolidated onto the same pod.

Warning

Because the jobs controller must stay alive to manage running jobs, it’s required to use an external API server to enable consolidation mode.

Consolidating the API server and the jobs controller has a few advantages:

6x faster job submission.

Consistent cloud/Kubernetes credentials across the API server and jobs controller.

Persistent managed job state using the same database as the API server, e.g., PostgreSQL.

No extra VM/pod is needed for the jobs controller, saving cost.

To enable the consolidated deployment, set consolidation_mode in the API server config.

jobs:
  controller:
    consolidation_mode: true
    # any specified resources will be ignored

Note

You must restart the API server after making this change for it to take effect.

# Update NAMESPACE / RELEASE_NAME if you are using custom values.

NAMESPACE=skypilot

RELEASE_NAME=skypilot

# Restart the API server to pick up the config change

kubectl -n $NAMESPACE rollout restart deployment $RELEASE_NAME-api-server

See more about the Kubernetes upgrade strategy of the API server.

Warning

When using consolidation mode with a remote SkyPilot API server that uses the RollingUpdate upgrade strategy, any local files or folders uploaded for managed jobs through file mounts or workdirs will be lost during a rolling update. To avoid this, source those files from a bucket, volume, or git instead, or configure a cloud bucket for all local files via jobs.bucket in your SkyPilot config to persist them.

jobs:
  bucket: s3://xxx

The jobs controller adds some overhead: it reserves an extra 2GB of memory for itself, which may reduce the number of requests your API server can handle. To compensate, you can increase the CPU and memory allocated to the API server; see Tuning API server resources.